Table of Contents

- The Art of Conversation: A Brief Look at Prompt Engineering

- The Limits of Lone Prompts: Challenges in Advanced AI Systems

- Enter Model Context Protocol (MCP): A New Paradigm for AI Communication

- How MCP Revolutionizes Prompt Engineering

- Key Components of MCP: The Building Blocks of Better Prompts

- Practical Applications: Where MCP Shines

- The Future of Prompt Engineering: Smarter AI Through Structured Context

- Conclusion

Conversations are the heartbeat of human connection. In the era of AI, they hold even greater promise.

Prompt engineering once thrived on single instructions. But as AI systems grow complex, lone prompts start to crack under pressure.

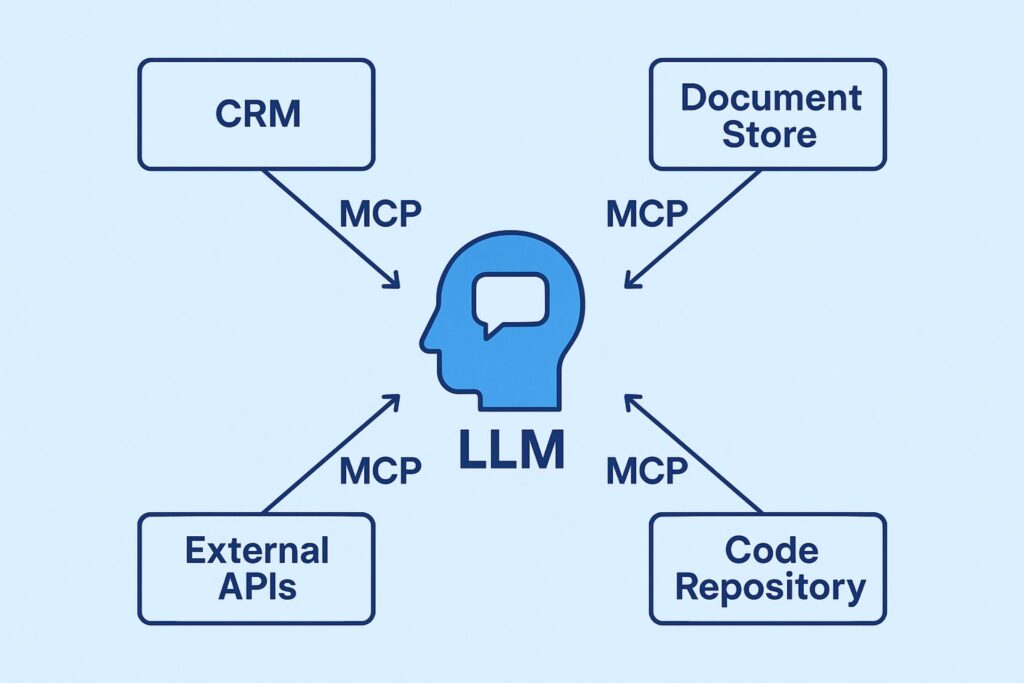

Enter Model Context Protocol (MCP), a fresh approach that layers context and clarity for richer AI dialogues.

From streamlined prompt structures to seamless multi-turn exchanges, MCP is redefining how we speak to machines.

Join us as we explore this new paradigm, dive into key components, and reveal real-world applications that promise smarter, more intuitive AI conversations. Let’s embark on the next frontier of AI collaboration.

The Art of Conversation: A Brief Look at Prompt Engineering

Imagine you’re talking to a friend who knows almost everything. You ask a question, they answer, and then you refine your follow-up. That’s basically what prompt engineering is all about. It’s the practice of crafting inputs—prompts—that guide AI models to produce the answers, stories, or code you need. In other words, it’s less about tricks and more like mastering the give-and-take of a dialogue.

In the earliest days of large language models, people discovered that even subtle wording changes could dramatically alter an AI’s response. Swap “Explain quantum physics like I’m five” for “Describe quantum mechanics simply,” and you might get an entirely different tone or depth. Prompt engineering emerged as the art of experimenting with phrasing, ordering, and context to hit that sweet spot.

Behind the scenes, AI models process your prompt as a sequence of tokens, weighing probabilities to predict the next token. But when you treat prompts as mere text, you miss out on their real power. Effective prompt engineers consider intent, structure, and reference points. They might include example inputs and outputs, define the role the model should play (teacher, translator, brainstorming partner), and set constraints such as word limits or formatting rules.
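To make those ingredients concrete, here is a minimal Python sketch that assembles a prompt from a role, a few example pairs, and a list of constraints. The function name `build_prompt` and its parameters are hypothetical, chosen only for illustration; they do not come from any library.

```python
def build_prompt(role, examples, constraints, question):
    """Assemble a prompt from a role, few-shot examples, and constraints."""
    lines = [f"You are a {role}."]
    # Few-shot examples show the model the input/output pattern to follow.
    for example_input, example_output in examples:
        lines.append(f"Input: {example_input}")
        lines.append(f"Output: {example_output}")
    # Constraints are listed explicitly so they are easy to add or remove.
    for rule in constraints:
        lines.append(f"Constraint: {rule}")
    lines.append(f"Input: {question}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_prompt(
    role="patient physics teacher",
    examples=[("What is gravity?", "Gravity is the pull between objects with mass.")],
    constraints=["Answer in at most two sentences.", "Avoid jargon."],
    question="Describe quantum mechanics simply.",
)
print(prompt)
```

Keeping each ingredient as a separate argument makes iteration cheaper: you can change one constraint and rerun without retyping the rest.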

What makes it feel like an art is iteration. You start broad, see what the model returns, then tweak. Maybe you add a heading or specify “use bullet points.” Maybe you inject a bit of humor. With each pass, the AI gets closer to what you envisioned. And while it might feel like trial and error at first, seasoned prompt engineers learn to anticipate how models interpret nuance—turning a few well-chosen words into a reliable conversational flow.

The Limits of Lone Prompts: Challenges in Advanced AI Systems

Relying on a single, standalone prompt can feel like sending a lone messenger into a crowded city. You hope it reaches the right destination, but without clear signposts, it often gets lost. As AI models tackle more complex tasks, these lone prompts start to hit walls.

Context Window Constraints

Every model has a finite context window. Long or multi-step instructions risk being truncated or ignored. When important details fall outside the token limit, the model never sees them, and it responds with gaps: incomplete answers or unrelated digressions.
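A rough sketch of what "falling outside the window" looks like in practice: the Python below keeps the instructions and then adds the newest conversation turns that still fit a token budget. It assumes, purely for illustration, that one whitespace-separated word equals one token; real tokenizers count differently. `count_tokens` and `fit_to_window` are hypothetical names.

```python
def count_tokens(text):
    # Assumption: one whitespace-separated word is one token.
    # Real tokenizers split text differently.
    return len(text.split())


def fit_to_window(instructions, history, max_tokens):
    """Keep the instructions, then add the newest history turns that still fit."""
    budget = max_tokens - count_tokens(instructions)
    kept = []
    # Walk from the newest turn backwards and stop at the first one that does not fit.
    for turn in reversed(history):
        cost = count_tokens(turn)
        if cost > budget:
            break
        kept.append(turn)
        budget -= cost
    # Restore chronological order before returning.
    return [instructions] + list(reversed(kept))


history = ["first turn with five words", "second turn", "third and latest turn"]
window = fit_to_window("Answer briefly.", history, max_tokens=8)
print(window)
```

With a budget of 8, the oldest turn is dropped. If that turn held a key requirement, the model would answer without it.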

One-Size-Fits-All Pitfalls

A generic prompt might work for simple queries, but quickly falls short when nuance matters. Ambiguities creep in. The model can misinterpret your intent or latch onto the wrong detail. This inconsistency makes it hard to rely on lone prompts for precise or specialized outcomes.

Finally, as tasks grow in scope, chaining multiple prompts together becomes messy. You end up wrangling context between calls, stitching responses, and managing state by hand. It slows you down and introduces more opportunities for error.

These challenges reveal a clear need: a more structured way to feed context into AI. One that keeps every detail aligned without forcing you to babysit each token. And that’s where the next breakthrough comes in.

Enter Model Context Protocol (MCP): A New Paradigm for AI Communication

In the fast-evolving world of AI, MCP emerges as the game-changer we’ve been waiting for. It’s not just another way to write prompts. MCP reimagines how we build, structure, and sustain conversations with models over time.

From One-Off Prompts to Continuous Dialogue

Think of traditional prompts as single snapshots. You ask, the model replies, and then everything resets. MCP flips that on its head by treating each exchange as part of an ongoing story. Context isn’t lost—it accumulates, evolves, and informs future responses.

Layered Context for Clearer Instructions

MCP breaks conversations into distinct layers. A system layer sets the AI’s role and guardrails. A user layer captures preferences, past answers, or goals. A memory layer stores key facts from earlier turns. This separation keeps each piece of information organized and easy to update.
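One way to picture the three layers is as fields on a small data structure that is flattened only at the last moment. The Python sketch below is an illustration of the idea as described here, not the protocol's wire format or any SDK's API; `LayeredContext` and the bracketed section labels are assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class LayeredContext:
    system: str                                   # role and guardrails
    user: dict = field(default_factory=dict)      # preferences and goals
    memory: list = field(default_factory=list)    # key facts from earlier turns

    def render(self):
        """Flatten the three layers into one text block, one section per layer."""
        user_lines = [f"- {key}: {value}" for key, value in self.user.items()]
        memory_lines = [f"- {fact}" for fact in self.memory]
        sections = [
            "[system]\n" + self.system,
            "[user]\n" + "\n".join(user_lines),
            "[memory]\n" + "\n".join(memory_lines),
        ]
        return "\n\n".join(sections)


context = LayeredContext(
    system="You are a support assistant. Never share account passwords.",
    user={"tone": "friendly", "goal": "fix a login problem"},
)
# A new fact goes into the memory layer without touching the other two.
context.memory.append("User already reset their password once.")
print(context.render())
```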

Modular Blocks You Can Swap In and Out

One of MCP’s standout features is modularity. Want to change the tone from formal to playful? Swap out the tone block. Need to feed in new domain data? Replace the data block without touching the rest. You no longer have to rewrite entire prompts for every tweak.

Metadata Makes Context Unambiguous

Every context block in MCP comes with metadata—labels, timestamps, relevance scores. The model knows which instructions are critical and which details are optional. That reduces misunderstandings and keeps conversations on track, even when they stretch across dozens of turns.
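As a sketch of how such metadata might be used, the Python below attaches a label, a timestamp, a relevance score, and a critical flag to each block, then selects and orders blocks before they reach the model. The field names, the 0.0 to 1.0 score range, and the selection rule are all assumptions made for this example.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class ContextBlock:
    label: str
    text: str
    relevance: float      # hypothetical score between 0.0 and 1.0
    created: datetime
    critical: bool = False


def select_blocks(blocks, min_relevance):
    """Keep critical blocks always, and optional blocks above the threshold."""
    chosen = [b for b in blocks if b.critical or b.relevance >= min_relevance]
    # Critical blocks first, then by descending relevance.
    return sorted(chosen, key=lambda b: (not b.critical, -b.relevance))


now = datetime.now(timezone.utc)
blocks = [
    ContextBlock("small-talk", "User likes hiking.", 0.2, now),
    ContextBlock("policy", "Refunds need a receipt.", 0.5, now, critical=True),
    ContextBlock("order", "The order arrived damaged.", 0.9, now),
]
labels = [b.label for b in select_blocks(blocks, min_relevance=0.6)]
print(labels)
```

Here the policy block survives despite its middling score because it is marked critical, while the low-relevance small talk is left out.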

Why This Matters for AI Communication

By standardizing how context is defined and managed, MCP tackles common pain points like context drift, forgotten instructions, and conflicting directives. The result is a smoother, more coherent interaction in which instructions given early on still hold dozens of turns later.

How MCP Revolutionizes Prompt Engineering

With MCP, prompt engineering leaps from one-off instructions to a dynamic conversation framework. Instead of shoehorning every detail into a single prompt, you break context into manageable, reusable segments. The model no longer treats each input as an island. Context flows freely, and responses become more coherent.

MCP introduces a protocol layer that handles context exchange. You define metadata, state variables, user preferences, or task-specific cues in dedicated channels. The AI then references these channels when crafting its output. It’s like giving the model a roadmap rather than a scribbled note.

This shift slashes prompt brittleness. You no longer tweak a giant blob of text every time the user’s goal changes. Add or update one context segment, and the rest of the conversation automatically adjusts. Less rewriting. Faster iterations. Clearer results.
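The "update one segment" idea can be sketched in a few lines of Python. Context lives in named segments, and changing the tone touches only that entry. The segment names and the `render` helper are hypothetical.

```python
def render(segments):
    """Join named context segments into one prompt, in insertion order."""
    return "\n".join(f"{name}: {text}" for name, text in segments.items())


segments = {
    "tone": "Formal and concise.",
    "domain": "Marketing copy for a coffee brand.",
    "task": "Write a product tagline.",
}
before = render(segments)

# Only the tone segment changes; the domain and task segments are untouched.
segments["tone"] = "Playful and upbeat."
after = render(segments)
print(after)
```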

Another game-changer: multi-model orchestration. MCP makes it simple to chain different AI engines—one for data extraction, another for creative prose, a third for factual validation. The protocol keeps each model’s context in sync. Outputs stay aligned, even when multiple engines contribute to the same thread.
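A toy version of that chain, in Python: three plain functions stand in for the extraction, prose, and validation engines, and a single shared context dictionary is passed through each in turn. No real models are called; every function here is a hypothetical placeholder.

```python
def extract(context):
    # Stand-in for a data-extraction model: pull out the whole numbers.
    numbers = [int(word) for word in context["source"].split() if word.isdigit()]
    return {**context, "numbers": numbers}


def write(context):
    # Stand-in for a prose model: describe the extracted values.
    total = sum(context["numbers"])
    return {**context, "draft": f"The figures add up to {total}."}


def validate(context):
    # Stand-in for a validation model: recheck the draft against the data.
    ok = str(sum(context["numbers"])) in context["draft"]
    return {**context, "validated": ok}


def run_pipeline(context, stages):
    """Pass one shared context through each stage in order."""
    for stage in stages:
        context = stage(context)
    return context


result = run_pipeline(
    {"source": "Sales were 40 in May and 2 in June"},
    [extract, write, validate],
)
print(result["draft"], result["validated"])
```

Because each stage returns the full context plus its own addition, later stages can always see what earlier ones produced.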

Debugging and collaboration become far easier, too. Context segments are versioned. Teams can review which piece of information led to a certain response. You can roll back a change or repurpose a module across projects. Prompt engineering moves from art to science, with measurable, repeatable building blocks.
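Versioning can be as simple as keeping every past value of a segment. The Python sketch below shows the update-and-rollback idea; the `VersionedSegment` class is an assumption for illustration, and a real team might equally keep segments in a version control system.

```python
class VersionedSegment:
    """A context segment that keeps every past value so changes can be undone."""

    def __init__(self, text):
        self.history = [text]

    @property
    def current(self):
        return self.history[-1]

    def update(self, text):
        self.history.append(text)

    def rollback(self):
        # Drop the latest version, but never remove the original one.
        if len(self.history) > 1:
            self.history.pop()
        return self.current


voice = VersionedSegment("Use a formal tone.")
voice.update("Use a playful tone.")
print(len(voice.history), voice.current)
voice.rollback()
print(voice.current)
```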

Key Components of MCP: The Building Blocks of Better Prompts

When you strip MCP down to its nuts and bolts, five elements stand out. Each one tackles a common pain point in prompt engineering. Combined, they transform scattered instructions into a coherent, context-rich conversation with your AI model.

Context Layers

Think of context layers as transparent sheets stacked on top of each other. Each sheet carries a different type of information—user goals, domain specifics, past interactions. By layering these sheets, MCP ensures your AI always “sees” the right background without overloading a single prompt.

This separation also makes it easy to update one layer without rewriting everything. Say you switch from marketing copy to legal advice—just swap that domain-specific layer, and the rest stays intact.

Dynamic Memory Slots

Static prompts quickly grow stale. Dynamic memory slots solve that by holding onto key details from previous turns—customer preferences, named entities, project deadlines. As the conversation unfolds, the AI taps into these memory slots to maintain continuity.

The key feature here is automatic pruning. Irrelevant or outdated memories fade away. This keeps the context lean and prevents obsolete facts from carrying forward and confusing the model.
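Pruning needs a rule for what counts as outdated. The Python sketch below uses one simple assumption: a slot is dropped once it has gone more than `max_age` turns without being written or read. The class name, the rule, and the sample facts are all hypothetical.

```python
class MemorySlots:
    """Store facts by key and drop those not touched for `max_age` turns."""

    def __init__(self, max_age):
        self.max_age = max_age
        self.turn = 0
        self.slots = {}  # key -> (value, turn last written or read)

    def remember(self, key, value):
        self.slots[key] = (value, self.turn)

    def recall(self, key):
        value, _ = self.slots[key]
        self.slots[key] = (value, self.turn)  # reading refreshes the slot
        return value

    def next_turn(self):
        # Advance the turn counter, then prune slots that have gone stale.
        self.turn += 1
        self.slots = {
            key: (value, last_used)
            for key, (value, last_used) in self.slots.items()
            if self.turn - last_used <= self.max_age
        }


memory = MemorySlots(max_age=2)
memory.remember("deadline", "Friday")
memory.remember("old_topic", "printer setup")
for _ in range(3):
    memory.next_turn()
    memory.recall("deadline")
print(sorted(memory.slots))
```

After three turns the deadline is still there because it keeps being used, while the unused topic has faded.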

Role-Based Tokens

With MCP, you assign “roles” to different parts of your prompt. A system role might enforce brand voice guidelines. A user role carries specific questions. A developer role can inject low-level instructions, few-shot examples, or debugging tips.

These tokens act as signposts inside the prompt, signaling the model how to treat each snippet. The result is more predictable output. The AI knows which bits to treat as hard rules and which to improvise around.
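In code, a role is often just a tag on each message. The Python sketch below tags snippets with one of the three roles mentioned above and orders them so hard rules come first. The priority ordering and the helper names are assumptions for this example.

```python
ROLE_PRIORITY = {"system": 0, "developer": 1, "user": 2}  # assumed ordering


def tag(role, text):
    """Wrap a snippet with its role, rejecting roles that are not defined."""
    if role not in ROLE_PRIORITY:
        raise ValueError(f"unknown role: {role}")
    return {"role": role, "content": text}


def order_by_role(messages):
    """Put hard rules first; sorted() is stable, so ties keep their order."""
    return sorted(messages, key=lambda message: ROLE_PRIORITY[message["role"]])


messages = [
    tag("user", "Draft a welcome email."),
    tag("developer", "Example: 'Hi Sam, welcome aboard!'"),
    tag("system", "Follow the brand voice: warm, no slang."),
]
ordered = [message["role"] for message in order_by_role(messages)]
print(ordered)
```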

Adaptive Instruction Sets

Rather than hardcoding a single instruction, MCP lets you define instruction sets that adapt on the fly. If the AI’s answer drifts off-topic, you can introduce a corrective instruction mid-conversation. If it’s nailing the style but misinterpreting a term, tweak that term in your instruction set.

This adaptive layer is like having a real-time coach whispering tips to the model. It keeps the AI agile and responsive to changing requirements.
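A corrective instruction can be added automatically when an answer drifts. The Python sketch below uses a deliberately crude assumption, that an answer is on topic if it mentions at least one keyword. Real drift detection would be more involved, and `is_off_topic` and `adapt` are hypothetical names.

```python
def is_off_topic(answer, topic_keywords):
    # Assumption: an answer is on topic if it mentions any keyword.
    lowered = answer.lower()
    return not any(keyword in lowered for keyword in topic_keywords)


def adapt(instructions, answer, topic_keywords):
    """Return the instruction list, with a correction added if the answer drifted."""
    if is_off_topic(answer, topic_keywords):
        correction = "Stay on topic: " + ", ".join(topic_keywords) + "."
        # Avoid stacking the same correction on every drifting turn.
        if correction not in instructions:
            return instructions + [correction]
    return instructions


instructions = ["Answer in two sentences."]
keywords = ["invoice", "billing"]
instructions = adapt(instructions, "Your Invoice is attached.", keywords)
print(len(instructions))
instructions = adapt(instructions, "The weather is lovely today.", keywords)
print(instructions[-1])
```

The first answer is on topic, so nothing changes. The second triggers the correction, which then travels with every later turn.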

Feedback Hooks

Collecting feedback is crucial, but it often feels tacked on. MCP builds feedback hooks directly into the protocol. After each response, you can trigger a quick validation step—rating relevance, checking tone, or confirming facts.

These hooks feed back into dynamic memory or prompt layers, refining future outputs. Over time, responses improve because the context supplied to the model gets better, with no retraining of the model itself.
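Here is a small Python sketch of the hook idea: validation functions are registered once, run after each response, and any problem they report is stored as a note to include in the next prompt. The `FeedbackLoop` class and both checks are assumptions made for illustration.

```python
class FeedbackLoop:
    """Run validation hooks on each response and keep failures as notes."""

    def __init__(self):
        self.hooks = []
        self.notes = []  # fed into the next prompt as extra context

    def hook(self, func):
        # Used as a decorator to register a validation function.
        self.hooks.append(func)
        return func

    def review(self, response):
        for check in self.hooks:
            problem = check(response)
            if problem and problem not in self.notes:
                self.notes.append(problem)
        return self.notes


loop = FeedbackLoop()


@loop.hook
def check_length(response):
    return "Keep answers under 12 words." if len(response.split()) > 12 else None


@loop.hook
def check_tone(response):
    return "Avoid exclamation marks." if "!" in response else None


notes = loop.review("Sure thing! Here is your refund status.")
print(notes)
```

Only the tone check fires here, so one note is carried forward. The model is unchanged; what improves is the context it receives next time.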

By combining context layers, dynamic memories, role tokens, adaptive instructions, and feedback hooks, MCP gives you a modular, robust framework. Each component plays a distinct role, but together they form a cohesive engine for smarter, more reliable prompts.

Practical Applications: Where MCP Shines

When it comes to real-world use cases, Model Context Protocol really starts to show its true colors. By handing off structured chunks of information instead of raw text prompts, MCP makes AI interactions more reliable, consistent, and context-aware. Let’s dive into a few areas where this fresh approach transforms workflows.

Enhanced Customer Support Bots

Imagine a helpdesk chatbot that never loses track of a user’s issue. With MCP, context such as prior tickets, product specs, and support policies can be packaged neatly before every exchange. The result? Fewer “I’m sorry, can you repeat that?” moments and faster resolutions. Support teams see reduced ticket escalations and happier customers overall.

Dynamic Content Creation

Writers and marketers often wrestle with inconsistencies between the brief and the draft: tone drift, missed brand guidelines, or disjointed style. MCP tackles this by bundling tone rules, brand lexicons, and campaign goals as discrete context modules. The AI then weaves them into blog posts, ad copy, or social updates without missing a beat. The final copy feels cohesive, on-brand, and tailored to the target audience.

Efficient Data Analysis and Reporting

Data scientists can leverage MCP to streamline data-driven insights. Instead of crafting sprawling prompts about dataset schemas, filter criteria, and visualization preferences each time, you load these elements once via context modules. From there, the model can pivot across tasks—summary statistics, trend analysis, anomaly detection—while keeping all the parameters neatly in scope.

Personalized Learning Experiences

Educational platforms thrive on adapting to individual student needs. By feeding tailored lesson plans, learning objectives, and assessment histories through MCP, AI tutors can maintain continuity across study sessions. Students get explanations pitched at the right level, with built-in scaffolding that evolves as they progress—and educators can monitor improvement without repetitive prompt engineering.

Collaborative Decision Making in Teams

In brainstorming or strategic planning sessions, keeping everyone on the same page is critical. MCP acts like a shared workspace of context: background research, stakeholder profiles, project constraints. Team members invoke the AI facilitator, and it seamlessly integrates all these context blocks. Discussions stay focused, action items are clearly defined, and follow-up prompts don’t require rehashing the basics.

The Future of Prompt Engineering: Smarter AI Through Structured Context

As AI systems grow more capable, the one-size-fits-all prompt just won’t cut it. We’re headed toward an era where every interaction is fueled by finely tailored context packets. Model Context Protocol (MCP) lays the groundwork for prompts that adapt on the fly, weaving together data, user preferences, and real-time signals.

Imagine an AI assistant that instantly shifts tone and depth based on your reading habits. Or a design tool that auto-injects brand guidelines as you sketch. That’s the power of structured context. Rather than dumping a wall of instructions into a single prompt, MCP lets us layer in context modules—each one a self-contained snippet of relevance.

Personalized Context Pipelines

One of the next big leaps is context pipelines that learn and adapt with you. Today you might manually tag relevant documents or tweak system messages. Tomorrow, MCP-driven agents will automatically surface the right context bundles—your calendar events, brand assets, industry glossaries—without you lifting a finger.

These pipelines aren’t static. They evolve as the AI tracks your goals: marketing copy in the morning, technical specs in the afternoon, and creative brainstorming at night. Each session builds on the last, making conversations feel more intuitive and less robotic.

Cross-Model Synergy

MCP also opens the door to true multi-model collaboration. Picture a vision model that tags and summarizes images, feeding those insights directly into a language model prompt. Or a speech-to-text engine that pre-filters audio snippets before passing them along. Structured context becomes the universal translator, helping diverse AI models speak the same language.

By standardizing how context is packaged and shared, MCP ensures that no matter which model you call upon, it’ll understand exactly what you need—and how to deliver it.

Adaptive Feedback Loops

In the near future, prompts won’t be static instructions; they’ll be living documents. As an AI responds, it can annotate which context modules were most useful—and which fell flat. MCP’s feedback hooks let us refine those modules in real time, sharpening accuracy and reducing wasted computation.
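One speculative way to act on such annotations is to count how often each supplied module is actually cited in a response and flag the ones that rarely are. The Python sketch below assumes the model (or a post-processing step) reports which modules it used. That reporting mechanism, the module names, and the 0.5 threshold are all hypothetical.

```python
from collections import Counter


def update_usage(usage, supplied, cited):
    """Count how often each supplied module was cited in a response."""
    for name in supplied:
        usage[name]["supplied"] += 1
        if name in cited:
            usage[name]["cited"] += 1


def underused(usage, threshold):
    """Return modules whose cited/supplied ratio falls below the threshold."""
    return sorted(
        name
        for name, counts in usage.items()
        if counts["supplied"] and counts["cited"] / counts["supplied"] < threshold
    )


modules = ["brand_voice", "glossary", "calendar"]
usage = {name: Counter() for name in modules}
update_usage(usage, modules, cited={"brand_voice", "glossary"})
update_usage(usage, modules, cited={"brand_voice"})
update_usage(usage, modules, cited={"brand_voice"})
print(underused(usage, threshold=0.5))
```

Modules flagged this way are candidates for rewriting or removal, which is where the savings in wasted computation would come from.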

This adaptive loop means every interaction is also a teaching moment. Models learn which context fragments drive the best outcomes, continuously optimizing the protocol for future users.

Structured context under MCP is more than a technical upgrade—it’s a philosophy shift. We’re moving toward AI that truly “gets” what we need, when we need it, and how we want it. And this is just the beginning.

Conclusion

MCP brings context and clarity to every AI exchange.

By layering structured context, it turns single-shot instructions into fluid, multi-turn dialogues.

This shift not only boosts performance but also deepens our collaboration with intelligent systems.

From customer support to creative ideation, MCP’s impact is already visible.

Whether you’re a developer or a strategist, now is the time to experiment. Dive in, iterate, and watch your AI tools come alive.

The future of AI conversation depends on how thoughtfully we guide these machines.

With MCP, we hold the key to smarter, richer, and more human-centric dialogues.